Apache Spark is the new buzzword in the data engineering world, especially because of its lightning-fast speed compared to its elder cousin, Hadoop MapReduce. Its fame is also due to its integration with popular programming languages such as Scala, Python, Java and R. Spark itself is written in Scala, a functional language, but it also offers APIs for Python, Java and R, which brings it to the attention of a much larger audience.

PySpark is the Python API for Spark, and it is quite popular thanks to its large and growing developer community. Since Python is considered one of the best languages to know for data analysis and engineering, Spark's support for Python makes a data person's life much easier. One of the first things every developer wants is a good environment for PySpark development, and a good answer is the JetBrains PyCharm IDE. PyCharm, as its name suggests, is one of the best and most charming IDEs for Python-focused development, which makes it well suited to PySpark development. JetBrains provides two editions of this IDE: a paid edition and the Community Edition, which is more than enough for us to get started with Spark.

Here is how you should configure PyCharm Community Edition to work with PySpark.

Prerequisites

- You should have Python installed on your system. At the time of writing, the latest version of Python available is 3.7.0. You can choose whichever version is the latest when you read this blog and download it from the official Python website.

- While installing Python, the installer will ask whether to add Python to your PATH. Check this box.

- You should have HADOOP_HOME set up in your environment variables. On Windows, you can simply download the winutils.exe that matches the Hadoop version of the Spark build you downloaded.

- I expect you have already downloaded the latest version of Apache Spark and added its home folder (SPARK_HOME) to your environment variables. If not, you can download it from the Apache Spark downloads page.
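Before opening PyCharm, it can help to confirm that these prerequisites are actually in place. The following is a minimal sketch, not an official tool: `check_prerequisites` is a hypothetical helper name, and it assumes the variables are named `SPARK_HOME` and `HADOOP_HOME` and that winutils.exe sits in the `bin` folder under `HADOOP_HOME`, which is the usual layout on Windows.

```python
import os
from pathlib import Path


def check_prerequisites(env=None, windows=None):
    """Return a list of problems found; an empty list means everything looks fine.

    Assumptions (not from the original post): the variables are called
    SPARK_HOME and HADOOP_HOME, and winutils.exe lives in HADOOP_HOME/bin.
    """
    env = os.environ if env is None else env
    windows = (os.name == "nt") if windows is None else windows
    problems = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
    elif not Path(spark_home, "python").is_dir():
        problems.append("no 'python' folder under SPARK_HOME")

    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        problems.append("HADOOP_HOME is not set")
    elif windows and not Path(hadoop_home, "bin", "winutils.exe").is_file():
        # winutils.exe is only needed on Windows.
        problems.append("winutils.exe not found in HADOOP_HOME/bin")

    return problems


if __name__ == "__main__":
    found = check_prerequisites()
    for problem in found:
        print("Problem:", problem)
    if not found:
        print("All prerequisites look fine.")
```

Run it once from a terminal. If it reports a problem, fix the environment variable and restart PyCharm so that it picks up the new value.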

Set up PyCharm for running PySpark

- Open PyCharm Community Edition and create a normal Python project. Make sure to choose the right Python interpreter.

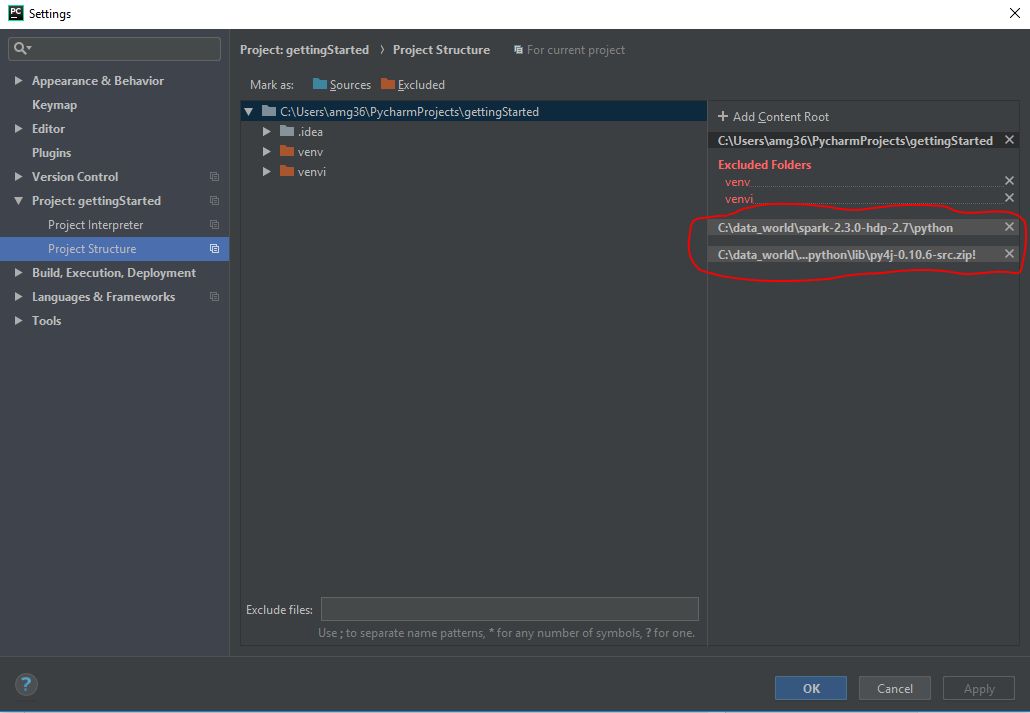

- Now navigate to File >> Settings >> Project: (your project name) >> Project Structure

- By default, you won't see the Spark entries listed on this screen, so we are going to add them to your project.

- Click on Add Content Root

- Now choose the python folder inside your Spark Home Folder

- Click on Add Content Root again and now choose the py4j-version-src.zip file inside SPARK_HOME >> python >> lib

- Click on Apply and that's it! PyCharm will now automatically link the external libraries you have specified for use in your new project.

- You may now go on and import your Spark libraries and start developing your PySpark application!
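The two content roots added above are just the `python` folder and the py4j source zip under your Spark home. As a rough sketch, the same two paths can be located from a script, which is handy for checking the setup or for running outside PyCharm. The helper names `spark_content_roots` and `smoke_test` are hypothetical, and the wildcard `py4j-*-src.zip` is an assumption that stands in for whatever py4j version your Spark download ships.

```python
import sys
from pathlib import Path


def spark_content_roots(spark_home):
    """Return the two paths that the steps above add as content roots."""
    python_dir = Path(spark_home) / "python"
    # The py4j version in the file name differs between Spark releases,
    # so match it with a wildcard instead of hard-coding it.
    py4j_zips = sorted((python_dir / "lib").glob("py4j-*-src.zip"))
    if not python_dir.is_dir() or not py4j_zips:
        raise FileNotFoundError(f"{spark_home} does not look like a Spark home")
    return [str(python_dir), str(py4j_zips[0])]


def smoke_test(spark_home):
    """Start a local Spark session and count the rows of a tiny DataFrame."""
    # Outside PyCharm, put the same two paths on sys.path by hand.
    sys.path[:0] = spark_content_roots(spark_home)
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[*]")
        .appName("pycharm-smoke-test")
        .getOrCreate()
    )
    try:
        return spark.range(5).count()
    finally:
        spark.stop()
```

Inside a PyCharm project configured as described, the `from pyspark.sql import SparkSession` line should resolve without the `sys.path` step. Calling `smoke_test(os.environ["SPARK_HOME"])` (after `import os`) is one quick way to confirm that Spark actually starts on your machine.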
